As usual when Karl Guttag looks into a headset, the result is a very detailed overview of all kinds of optical and image-related (qualitative) measurements and comparisons. Probably only of interest to the real XR nerds.

Some excerpts:

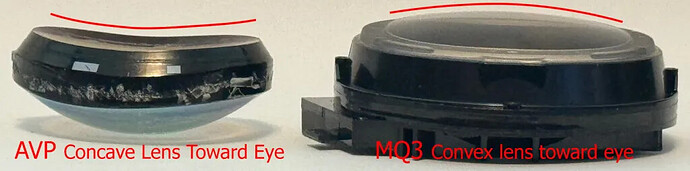

Amazing difference in lens design between the AVP (left) and the Meta Quest 3 (right). He also shows the effect of removing the face shield from the AVP: you get a much wider field of view because your eyes can get closer to the lenses. Several people who tried this have reported the same online.

Apple is using eye tracking to correct the optics in addition to foveated rendering, and most of the time, thanks to eye tracking and processing technology, the user will be unaware of all the dynamic corrections being applied.

There are definitely color uniformity problems with the AVP that I see with my eyes as well. There is a cyan ring (lack of red) on the outside of every image, and the center of the screen has splotches of color (most often red/pink).

The amount of color variation is not noticeable in typical colorful scenes like movies and photographs. Still, it is noticeable when displaying mostly white screens, as commonly occurs with web browsing, word processing, or spreadsheets.

It is also known that the AVP’s eye tracking is used to try to correct for color variation. I have seen some bizarre color effects when eye tracking is lost.

On pixel density:

The result I get is that there are about 44.4 pixels per degree (PPD) in the center of the image.

Having ~44.4 ppd gives (confirmed by looking at a virtual Snellen eye chart) about 20/30 vision in the center. This is the best case: content viewed directly in the center of the screen, not through the passthrough cameras, which are worse (more like 20/35 to 20/40).
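The link between 44.4 PPD and "about 20/30" can be checked with a common rule of thumb: 20/20 acuity is usually equated with resolving about 1 arcminute, i.e. 60 pixels per degree, so the Snellen denominator scales roughly as 20 × 60 / PPD. This is a simplified sketch assuming one pixel per resolvable arcminute; it ignores lens blur, subpixel layout and the AVP's image processing, and the function names are illustrative only.

```python
def pixels_per_degree(pixels: float, degrees: float) -> float:
    """Average angular pixel density over a measured span of the image."""
    return pixels / degrees


def snellen_denominator(ppd: float, normal_ppd: float = 60.0) -> float:
    """Approximate the X in 20/X for a display with the given pixel density.

    Assumes 20/20 vision corresponds to resolving 1 arcminute,
    i.e. 60 pixels per degree (one pixel per arcminute).
    """
    return 20.0 * normal_ppd / ppd


if __name__ == "__main__":
    # Hypothetical measurement: 444 pixels spanning 10 degrees gives 44.4 PPD.
    ppd = pixels_per_degree(444, 10)
    print(f"{ppd:.1f} PPD ~ 20/{snellen_denominator(ppd):.0f}")
```

At 44.4 PPD this works out to roughly 20/27, which is consistent with the "about 20/30" read off the virtual eye chart.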

Content getting scaled larger and with higher contrast for better (perceived) resolution?

The AVP processes and often over-processes images. The AVP defaults make everything BIG, whether the content is AVP-native or MacBook-mirrored. I see this behavior as a “trick” to make the AVP’s resolution seem better. In the case of native windows, I had to fix the window and then move back from it to work around these limitations. There are fewer restrictions on MacBook mirroring window sizes, but the default is to make windows and their content bigger.

The AVP also likes to try to improve contrast and will oversize the edges of small things like text, which makes everything look like it was printed in BOLD. While this may make things easier to read, it is not a faithful representation of what is meant to be displayed. This problem occurs both with “native” rendering (drawing a spreadsheet) and when displaying a bitmapped image. As humans perceive higher contrast as higher resolution, making things bolder is another processing trick to give the impression of higher resolution.

Interesting information quoted from an article by The Verge on display mirroring and scaling from a Mac laptop to the AVP:

“There is a lot of very complicated display scaling going on behind the scenes here, but the easiest way to think about it is that you’re basically getting a 27-inch Retina display, like you’d find on an iMac or Studio Display. Your Mac thinks it’s connected to a 5K display with a resolution of 5120 x 2880, and it runs macOS at a 2:1 logical resolution of 2560 x 1440, just like a 5K display. (You can pick other resolutions, but the device warns you that they’ll be lower quality.) That virtual display is then streamed as a 4K 3560 x 2880 video to the Vision Pro, where you can just make it as big as you want. The upshot of all of this is that 4K content runs at a native 4K resolution — it has all the pixels to do it, just like an iMac — but you have a grand total of 2560 x 1440 to place windows in, regardless of how big you make the Mac display in space, and you’re not seeing a pixel-perfect 5K image.”
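The scaling arithmetic in the quote can be reproduced in a few lines. This is only a check of the numbers as quoted, assuming an integer 2:1 (HiDPI) scale factor; the helper names are illustrative. It also shows that the quoted 3560 x 2880 stream size does not share the 16:9 aspect ratio of the 5120 x 2880 virtual display (a 16:9 4K UHD frame is 3840 x 2160), so that particular figure is worth treating with some caution.

```python
from fractions import Fraction


def logical_resolution(width: int, height: int, scale: int = 2) -> tuple[int, int]:
    """Logical (point) resolution of a HiDPI display at an integer scale factor."""
    return width // scale, height // scale


def aspect_ratio(width: int, height: int) -> Fraction:
    """Exact aspect ratio as a reduced fraction."""
    return Fraction(width, height)


if __name__ == "__main__":
    virtual = (5120, 2880)  # virtual 5K display the Mac believes it is driving
    stream = (3560, 2880)   # stream size as quoted by The Verge

    print("logical:", logical_resolution(*virtual))
    print("virtual aspect:", aspect_ratio(*virtual))
    print("stream aspect:", aspect_ratio(*stream))
    print("4K UHD aspect:", aspect_ratio(3840, 2160))
```

The 2560 x 1440 logical result is the "grand total" of window space mentioned in the quote, independent of how large the virtual display is made in space.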

Yet, depending on where the content you’re viewing in the AVP comes from, you get different resolution results:

The process appears to be different for bitmaps stored directly on the AVP, as I seem to see different artifacts depending on whether the source is an AVP file, a web page, or a mirrored MacBook (I’m working on more studies of this issue).

Passthrough latency is really good on the AVP (based on earlier reports):

The first study confirms Apple’s claim that the AVP has less than a 12ms “photon-to-photon” delay (the time from something moving in front of the camera to the display showing it) and shows that the delay is nearly four times less than the latest pass-through MR products from Meta and HTC.
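One way to see why low passthrough latency matters: during a head turn, the passthrough image lags the real world by roughly angular velocity × latency. The sketch below uses that first-order approximation and ignores the prediction and reprojection that headsets commonly apply. The 100°/s head speed is an assumed example value, and the ~48 ms case simply applies the "nearly four times" ratio from the quote to 12 ms; it is not a measured figure for any specific headset.

```python
def lag_error_degrees(head_speed_deg_s: float, latency_ms: float) -> float:
    """First-order angular misregistration between passthrough and the real world."""
    return head_speed_deg_s * latency_ms / 1000.0


def lag_error_pixels(error_deg: float, ppd: float) -> float:
    """Convert an angular error into display pixels at a given pixel density."""
    return error_deg * ppd


if __name__ == "__main__":
    head_speed = 100.0  # deg/s, an assumed moderate head turn
    ppd = 44.4          # center PPD reported for the AVP
    for label, latency in [("~12 ms", 12.0), ("~4x slower, ~48 ms", 48.0)]:
        err = lag_error_degrees(head_speed, latency)
        print(f"{label}: {err:.1f} deg, about {lag_error_pixels(err, ppd):.0f} px")
```

Under these assumptions the error grows linearly with latency, so cutting latency by about 4x cuts the visible "swim" of the passthrough image by the same factor.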

Conclusions from Karl Guttag:

Simply put, the AVP’s display quality is good compared to almost every other VR headset but very poor compared to even a modestly priced modern computer monitor. Today’s consumer would not pay $100 for a computer monitor that looked as bad as the AVP.

For “spatial computing applications,” the AVP “cheats” by making everything bigger. But in doing so, information density is lost, making the user’s eyes and head work more to see the same amount of content, and you simply can’t see as much at once.

This might be a very optics- and display-centered opinion, though. For the end user, what matters is whether using an AVP as a virtual display device instead of a set of physical monitors makes sense. Some reports online are very positive about its virtual display potential; others are critical (heavy headset, neck strain, eye strain, etc.).